It’s unlikely Kodak’s Bryce Bayer had any idea that, 40 years after he patented a ‘Color Imaging Array’, his design would underpin nearly all contemporary photography and live in the pockets of countless millions of people around the world.

It seems so obvious, once someone else has thought of it, but capturing red, green and blue information as an interspersed, mosaic-style array was a breakthrough.

Image: based on original by Colin M.L Burnett

The Bayer Color Filter Array is a genuinely brilliant piece of design: it’s a highly effective way of capturing color information from silicon sensors that can’t inherently distinguish color. Most importantly, it does a good job of achieving this color capture while still capturing a good level of spatial resolution.

However, it isn’t entirely without its drawbacks: It doesn’t capture nearly as much color resolution as a camera’s pixel count seems to imply, it’s especially prone to sampling artifacts and it throws away a lot of light. So how bad are these problems and why don’t they stop us using it?

Resolution

There’s a limit to how much resolution you can capture with any pixel-based sensor. Sampling theory dictates that a system can only perfectly reproduce signals up to half the sampling frequency (a limit known as the Nyquist frequency). If you think about trying to represent a single pixel-width black line, you need at least two pixels to be sure of representing it properly: one to capture the line and another to capture the not-line.

Just to make things more tricky, this assumes your pixels are aligned perfectly with the line. If they’re slightly misaligned, you may get two grey pixels instead. This is taken into consideration by the Kell factor, which says that you’ll actually only reliably capture resolution around 0.7x your Nyquist frequency.

A sensor capturing detail at every pixel can perfectly represent data at up to 1/2 of its sampling frequency, so 4000 vertical pixels can represent 2000 cycles (or 2000 line pairs as we’d tend to think of it). This is a fundamental rule of sampling theory.
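To put numbers on this, here is a minimal sketch of the arithmetic, using the 4000-pixel example and the approximate 0.7x Kell factor quoted above. The function names are made up for illustration and the Kell factor is a rule of thumb, not a precise constant.

```python
KELL_FACTOR = 0.7  # approximate figure quoted in the text, not an exact constant


def nyquist_cycles(pixels):
    """Maximum number of cycles (line pairs) that `pixels` samples can represent."""
    return pixels / 2


def kell_limited_cycles(pixels, kell=KELL_FACTOR):
    """Cycles you can expect to capture reliably once misalignment is allowed for."""
    return nyquist_cycles(pixels) * kell


limit = nyquist_cycles(4000)          # 2000 cycles: the theoretical ceiling
reliable = kell_limited_cycles(4000)  # about 1400 cycles in practice
print(limit, round(reliable))
```

So a sensor with 4000 vertical pixels has a theoretical ceiling of 2000 line pairs, but something nearer 1400 is a more realistic expectation.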

But, of course, a Bayer sensor doesn’t sample all the way to its maximum frequency because you’re only sampling single colors at each pixel, then deriving the other color values from neighboring pixels. This lowers resolution (effectively slightly blurring the image).
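The simplest way to picture ‘deriving the other color values from neighboring pixels’ is bilinear interpolation: at a red or blue pixel, estimate green by averaging the green pixels that surround it. The sketch below assumes an RGGB layout and a neutral grey scene, and the function names are hypothetical. It is not how any particular camera or Raw converter works (real demosaicing is considerably smarter), but it shows where the slight blurring comes from.

```python
def bayer_color(row, col):
    """Filter color at (row, col), assuming an RGGB layout."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def interpolate_green(raw, row, col):
    """Green value at (row, col): measured at green sites, averaged elsewhere."""
    if bayer_color(row, col) == "G":
        return raw[row][col]
    neighbours = []
    # In a Bayer layout the four direct neighbours of a red or blue site are green.
    for d_row, d_col in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        r, c = row + d_row, col + d_col
        if 0 <= r < len(raw) and 0 <= c < len(raw[0]):
            neighbours.append(raw[r][c])
    return sum(neighbours) / len(neighbours)


# A hard vertical edge in a neutral scene: two dark columns, two bright columns.
raw = [[0, 0, 100, 100] for _ in range(4)]

# (2, 2) is a red site just on the bright side of the edge.
estimate = interpolate_green(raw, 2, 2)
print(estimate)
```

The true value at that pixel is 100, but one of its four green neighbors sits on the dark side of the edge, so the estimate comes out at 75: the edge has been softened.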

So, with these two factors (the limitations of sampling and Bayer’s lower sampling rate) in mind, how much resolution should you expect from a Bayer sensor? Since human vision is most sensitive to green information, it’s the green part of a Bayer sensor that’s used to provide most of the spatial resolution. Let’s have a look at how it compares to sampling luminance information at every pixel.

Counter-intuitive though it may sound, the green channel captures just as much horizontal and vertical detail as the sensor capturing data at every pixel. Where it loses out is on the diagonals, which sample at 1/2 the frequency.

Looking at just the green component, you should see that a Bayer sensor can still capture the same horizontal and vertical green (and luminance) information as a sensor sampling every pixel. You lose something on the diagonals, but you still get a good level of detail capture. This is a key aspect of what makes Bayer so effective.*

Red and blue information is captured at much lower resolutions than green. However, human vision is more sensitive to luminance (brightness) information than chroma (color) information, which makes this trade-off visually acceptable in most circumstances.

It’s a less good story when we look at the red and blue channels. Their sampling resolution is much lower than the luminance detail captured by the green channel. It’s worth bearing in mind that human vision is much more sensitive to luminance resolution than it is to color information, so viewers are likely to be more tolerant of this shortcoming.
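As a rough illustration of how unevenly the channels are sampled, the sketch below lays out a Bayer mosaic and counts how many pixels each color gets. The RGGB tile order and the helper names are assumptions for the example. The red/blue limit follows from those colors appearing only on every second row and column.

```python
from collections import Counter

# One repeat unit of the mosaic, assuming RGGB order.
BAYER_TILE = (("R", "G"),
              ("G", "B"))


def channel_fractions(height, width):
    """Share of the sensor's pixels devoted to each color."""
    counts = Counter(
        BAYER_TILE[row % 2][col % 2]
        for row in range(height)
        for col in range(width)
    )
    total = height * width
    return {channel: counts[channel] / total for channel in "RGB"}


def red_blue_nyquist_cycles(pixel_height):
    """Red (or blue) is sampled on every second row, so its Nyquist limit
    is half of half the pixel height."""
    return pixel_height / 4


fractions = channel_fractions(8, 8)
print(fractions)
print(red_blue_nyquist_cycles(4000))
```

Half the pixels are green and a quarter each are red and blue, so on a 4000-pixel-high sensor the red and blue channels top out at around 1000 cycles vertically, before any allowance for the Kell factor.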

Aliasing

So what happens to everything above the Nyquist frequency? Well, unless you do something to stop it, your camera will try to capture this information, then present it in a way it can represent: a process called aliasing.

Think about photographing a diagonal black stripe with a low resolution camera. Even with a black and white camera, you risk the diagonal being represented as a series of stair steps: a low-frequency pattern that acts as an ‘alias’ for the real pattern.

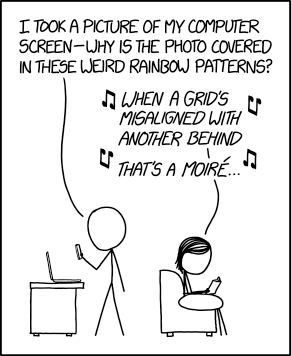

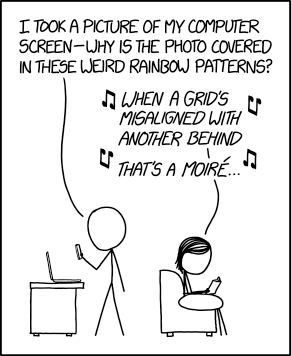

The same thing happens with fine repeating patterns that are a higher frequency than your sensor can cope with: they appear as spurious aliases of the real pattern. These spurious patterns are known as moiré. This isn’t unique to Bayer, though, it’s a side-effect of trying to capture higher frequencies than your sampling can cope with. It will occur on all sensors that use a repeating pattern of pixels to capture a scene.

Source: XKCD
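You can see why the alias is indistinguishable from a real pattern with a one-dimensional sketch. Below, a pattern of 0.8 cycles per pixel (well above the 0.5 cycles per pixel Nyquist limit) is sampled once per pixel and compared with a genuine 0.2 cycles per pixel pattern. The frequencies and function names are chosen purely for illustration.

```python
import math


def alias_frequency(freq, sample_rate=1.0):
    """Frequency (in cycles per pixel) that `freq` shows up as after sampling."""
    return abs(freq - sample_rate * round(freq / sample_rate))


def sample_cosine(freq, n_pixels):
    """Sample a cosine pattern of `freq` cycles per pixel at each pixel center."""
    return [math.cos(2 * math.pi * freq * n) for n in range(n_pixels)]


too_fine = sample_cosine(0.8, 10)  # detail beyond what the sensor can resolve
coarse = sample_cosine(0.2, 10)    # a genuinely coarser pattern

largest_difference = max(abs(a - b) for a, b in zip(too_fine, coarse))
print(alias_frequency(0.8), largest_difference)
```

The two sets of samples match to within floating-point rounding, so once the image has been captured there is no way to tell the fine pattern from its coarse alias.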

Sensors that use the Bayer pattern are especially prone to aliasing though, because the red and blue channels are being sampled at much lower frequencies than the full pixel count. This means there are two Nyquist frequencies (a green/luminance limit and a red/blue limit) and two types of aliasing you’ll tend to encounter: errors in detail whose pattern is too fine for the sensor to capture correctly, and errors in (much less fine) detail whose color the camera can’t correctly assess.


To reduce this first kind of error most cameras have, historically, included Optical Low Pass Filters, also known as Anti-Aliasing filters. These are filters mounted in front of the sensor that intentionally blur light across nearby pixels, so that the sensor doesn’t ever ‘see’ the very high frequencies that it can’t correctly render, and doesn’t then misrepresent them as aliasing.**

The point at the center of the Siemens star is too fine for this monochrome camera to represent, so it’s produced a spurious diamond-shaped ‘alias’ at the center instead.

This second image was shot with a very high resolution camera, blurred to remove high frequencies, then downsized to the same resolution as the first shot. It still can’t accurately represent the star, but doesn’t alias when failing.
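The ‘blur, then downsize’ idea can be sketched in one dimension. Here a fine pattern is captured at four times the output resolution, then reduced either by simply keeping every fourth sample or by averaging each group of four first. The box average is only a stand-in for optical blur (a real low-pass filter behaves differently), and the pattern period and oversampling factor are arbitrary choices for the example.

```python
import math

FACTOR = 4  # fine samples per output pixel (arbitrary oversampling ratio)


def fine_pattern(n_samples, period=5):
    """A repeating pattern too fine for the output resolution to represent."""
    return [math.cos(2 * math.pi * i / period) for i in range(n_samples)]


def decimate(signal, factor=FACTOR):
    """Downsize with no filtering: keep every `factor`-th sample."""
    return signal[::factor]


def blur_then_decimate(signal, factor=FACTOR):
    """Downsize after averaging each block of `factor` samples."""
    return [
        sum(signal[i:i + factor]) / factor
        for i in range(0, len(signal) - factor + 1, factor)
    ]


def contrast(samples):
    return max(samples) - min(samples)


pattern = fine_pattern(80)
aliased = decimate(pattern)
filtered = blur_then_decimate(pattern)
print(round(contrast(aliased), 2), round(contrast(filtered), 2))
```

Neither version can show the original pattern, but the unfiltered one presents a spurious pattern at close to full contrast, while the pre-blurred one is much closer to a flat grey.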

These filters aren’t so strong as to completely prevent all types of aliasing (very few people would be happy with a filter that blurred the resolution down to 1/4 of the pixel height: the Nyquist frequency of red and blue capture). Instead, they blur the light just enough to avoid harsh stair-stepping and reduce the severity of the false color on high-contrast edges.

With a Bayer filter, you get a fun color component to this aliasing. Not only has the camera tried to capture finer detail than its sensor can manage, you get to see the side-effect of the different resolutions the camera captures each color with.

Again, if you compare this with a significantly over-sampled image, blurred then downsized, you don’t see this problem. However, look closely and you can still see traces of the false color that occurred at the much higher frequency this camera was shooting at.

This means that, with a camera that has an anti-aliasing filter, you shouldn’t see as much false color in the high-contrast mono targets within our test scene, but it’ll do nothing to prevent spurious (aliased) patterns in the color resolution targets.

Even with an anti-aliasing filter, you’ll still get aliasing of color detail, because the maximum frequency of red or blue that can be captured is much lower.

This image was shot at the same nominal resolution but with red, green and blue information captured for each output pixel: showing how the target could appear, with this many pixels.

Light loss

At the silicon level, modern sensors are pretty amazing. Most of them operate at an efficiency (the proportion of light energy converted into electrons) around 50-80%. This means there’s less than 1EV of performance improvement to be had in that respect, because you can’t double the performance of something that’s already over 50% effective. However, before the light can get to the sensor, the Bayer design throws away around 1EV of light, because each pixel has a filter in front of it, blocking out the colors it’s not meant to be measuring.
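The arithmetic behind those EV figures is just a base-2 logarithm, since each EV (or stop) is a doubling or halving of light. A minimal sketch, with hypothetical function names:

```python
import math


def stops_available(efficiency):
    """EV of improvement left if `efficiency` (0 to 1) were raised to 100%."""
    return math.log2(1.0 / efficiency)


def transmitted_fraction(stops_lost):
    """Fraction of light that remains after losing `stops_lost` EV."""
    return 2.0 ** -stops_lost


print(stops_available(0.5))             # headroom at 50% efficiency
print(round(stops_available(0.8), 2))   # headroom at 80% efficiency
print(transmitted_fraction(1))          # what a 1EV loss leaves behind
```

At 50% efficiency there is exactly 1EV left to gain, and at 80% only about a third of a stop, whereas a color filter array costing around 1EV is letting through only about half the light that reaches it.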


This is why Leica’s ‘Monochrom’ models, which don’t include a color filter array, are around one stop more sensitive than their color-aware sister models. (And, since they can’t produce false color at high-contrast edges, they don’t include anti-aliasing filters, either).

It’s this light loss component that may eventually spell the end of the Bayer pattern as we know it. For all its advantages, Bayer’s long term dominance is probably most at risk if it gets in the way of improved low-light performance. This is why several manufacturers are looking for alternatives to the Bayer pattern that allow more light through to the sensor. It’s telling, though, that most of these attempts are essentially variations on the Bayer theme, rather than total reinventions.

The alternatives

These variations aren’t the only alternatives to the Bayer design, of course.

Sigma’s Foveon technology attempts to measure multiple colors at the same location, so promises higher color resolution, no light loss to a color filter array and less aliasing. But, while these sensors are capable of producing very high pixel-level sharpness, this currently comes at an even greater noise cost (which limits both dynamic range and low light performance), and the design struggles to compete with the color reproduction accuracy that can be achieved using well-tuned colored filters. More recent versions reduce the color resolution of two of their channels, sacrificing some of their color resolution advantage for improved noise performance.


Meanwhile, Fujifilm has struck out on its own, with the X-Trans color filter pattern. This still uses red, green and blue filters but features a larger repeat unit: a pattern that repeats less frequently, to reduce the risk of it clashing with the frequency it’s trying to capture. However, while the demosaicing of X-Trans by third-party software is improving, and the processing power needed to produce good-looking video looks like it’s being resolved, there are still drawbacks to the design.

Ironically, devoting so much of the sensor to green/luminance capture appears to have the side-effect of reducing its ability to capture and represent foliage (perhaps because it lacks the red and blue information required to render the subtle tint of different greens).

Which leaves Bayer in a situation akin to Winston Churchill’s description of democracy as ‘the worst form of Government except all those other forms that have been tried from time to time.’

40 not out

As we’ve seen before, the sheer amount of effort being put into development and improvement of Bayer sensors and their demosaicing is helping them overcome the inherent disadvantages. Higher pixel counts keep pushing the level of color detail that can be resolved, despite the 1/2 green, 1/4 red, 1/4 blue capture ratio.

And, because the frequencies that risk aliasing relate to the sampling frequency, higher pixel count sensors are showing increasingly little aliasing. The likelihood of you encountering frequencies high enough to cause aliasing falls as your pixel count helps you resolve more and more detail.

Add to this the fact that lenses can’t perfectly transmit all the detail that hits them, and you start to reach the point that the lens will effectively filter out the very high frequencies that would otherwise induce aliasing. At present, we’ve seen filter-less full frame sensors of 36MP, APS-C sensors of 24MP and Four Thirds sensors of 16MP, all of which are sampling their lenses at over 200 pixels per mm, and these only produce significant moiré when paired with very sharp lenses shot wide enough open that diffraction doesn’t end up playing the anti-aliasing role.
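The ‘over 200 pixels per mm’ figure is easy to sanity-check. The sketch below uses the nominal pixel counts above and assumes typical sensor dimensions (36 x 24mm for full frame, 23.5 x 15.6mm for APS-C, 17.3 x 13mm for Four Thirds). Exact dimensions and pixel counts vary between models, so treat the results as approximate.

```python
import math

# (nominal pixel count, assumed width in mm, assumed height in mm)
SENSORS = {
    "Full frame, 36MP": (36e6, 36.0, 24.0),
    "APS-C, 24MP": (24e6, 23.5, 15.6),
    "Four Thirds, 16MP": (16e6, 17.3, 13.0),
}


def pixels_per_mm(pixel_count, width_mm, height_mm):
    """Linear pixel density, assuming square pixels covering the whole sensor."""
    return math.sqrt(pixel_count / (width_mm * height_mm))


densities = {name: pixels_per_mm(*spec) for name, spec in SENSORS.items()}
for name, density in densities.items():
    print(f"{name}: about {density:.0f} pixels per mm")
```

Under these assumptions the three formats come out at roughly 204, 256 and 267 pixels per mm respectively, so all three clear the 200 mark, with the smaller formats sampling their lenses more finely than the full frame sensor.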

So, despite the cost of light and of color resolution, and the risk of error, Bryce Bayer’s design remains firmly at the heart of digital photography, more than 40 years after it was first patented.

Thanks are extended to DSPographer for sanity-checking an early draft and to Doug Kerr, whose posts helped inform the article, who inspired the diagrams and who was hugely supportive in getting the article to a publishable state.

* Unsurprisingly, some manufacturers have tried to take advantage of this increased diagonal resolution by effectively rotating the pattern by 45°: this isn’t commonplace enough to derail this article with such trickery, so we’ll label them ‘witchcraft’ and carry on as we were.

** The more precocious among you may be wondering ‘but wouldn’t your AA filter need to attenuate different frequencies for the horizontal, vertical and diagonal axes?’ Well, ideally, yes, but it’s easier said than done and far beyond the scope of this article.

Articles: Digital Photography Review (dpreview.com)