
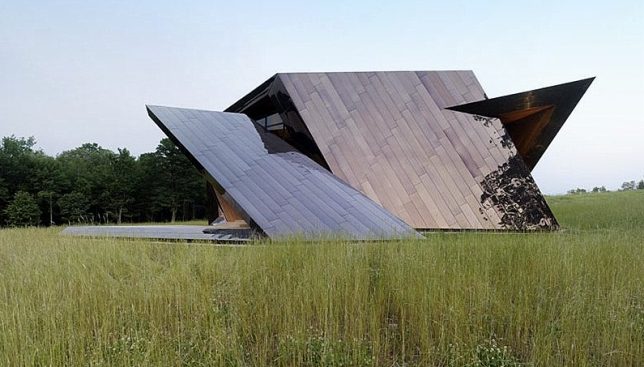

The Nokia OZO is a state-of-the-art VR camera.

Virtual reality, or VR, has the potential to redefine the way we interact with images, including still images, movies, and other forms of visual storytelling. It's already being adopted by major news organizations that want to take you deeper into stories, Hollywood studios that want to create more immersive entertainment, and content creators who want to share experiences that don't work as effectively on a flat screen.

VR is still at a stage where it's mostly of interest to early adopters, but it's an exciting time to get involved with this new medium. In particular, it's a great time for photographers and filmmakers to start thinking about VR, as this technology will likely affect the way we share our work, tell stories, and even remain competitive in business over the next several years.

Timing is everything

My first VR experience came many years ago when a technology incubator next to the molecular biology lab where I worked asked for volunteers to test a new ‘human interface technology.’ I found myself standing in a room full of computers, wearing a large headset with wires hanging out, and with something that looked like a hockey glove on my hand. A nerdy grad student was on hand to guide me through a virtual world.

The graphics in this world consisted of nothing more than rooms full of poorly shaded spheres, cubes, and cylinders. There was no illusion of reality, but I could navigate through doors and wander around. I later discovered that the grad student was actually from the psychology department and that I was, for all intents and purposes, a lab rat trying to find my cheese in a virtual maze. The grad student never revealed whether I did a better job of navigating the maze than the real rat, but the VR experience stuck in my mind. My gut told me it had potential.

“I was, for all intents and purposes, a lab rat trying to find my cheese in a virtual maze…”

As Mark Banas' recent article discusses, and as my own ersatz rat experience confirms, VR has been around for a while and has even enjoyed some success, particularly in the gaming world. However, the technology behind VR may finally be sophisticated enough to give it a fighting chance of being useful to a wider audience, particularly photographers and filmmakers. When I discuss VR in this article, I'm referring specifically to VR in this context: VR for photographers, filmmakers, and other visual storytellers.

I'm not a hardcore computer gamer or a rabid VR enthusiast; it's likely that some of you reading this article know a lot more about VR than I do. However, I suspect I'm fairly representative of the typical photographer or filmmaker who's followed VR from afar with a healthy dose of skepticism, waiting for someone to make a convincing argument that VR is relevant to me.

VR has enjoyed some success in the gaming world, but as a visual storyteller I've been waiting for someone to demonstrate how VR is relevant to me.

That's not to say that I haven't experimented with VR; it's just that until recently it never seemed terribly compelling to me as a content creator. Almost every VR experience I tried boiled down to the same basic formula:

- Videographer places a VR camera in an iconic location and captures video from a single spot.

- Viewer puts on a headset and watches video until he or she gets bored.

At this point it seems like I’ve (virtually) stood around a lot of places: the pyramids of Egypt, next to the Eiffel Tower, Machu Picchu… you get the idea. But the key words are ‘stood around’. The experience can be interesting at first, but after about 30 seconds you’ve spun in a circle, looked up and down, and pretty much seen all there is to see.

But that's not what you do when you go to one of these places in real life. You want to explore, to learn something, to understand the story of the people or the place you're visiting.

Viewing an iconic place, such as Machu Picchu, using VR can be interesting. However, unless you show your audience something unique, help them understand the place, or immerse them in a compelling experience, they will quickly lose interest.

Virtual Experiences

For VR to gain any type of traction it needs to go beyond this ‘stand there and look around’ model – and, fortunately, it has. This was particularly noticeable at the NAB trade show in April where VR technology appeared to be everywhere. There was even a Virtual and Augmented Reality Pavilion that served as a hub for numerous VR companies, including makers of capture devices, display systems, and even content creators.

My personal VR epiphany occurred at a technology showcase run by Kaleidoscope VR, a VR studio. In a roped-off area, dozens of people sat in chairs spread across the floor, each engrossed in some virtual world. What set the experience apart from most other VR demos I've seen was its focus on putting viewers into immersive stories and experiences.

Visitors trying virtual reality at the Kaleidoscope VR showcase.

‘Content is King’ may be one of the most overused phrases in modern media, but it keeps getting recycled because it’s fundamentally true. Lack of good content is why VR always seemed dull or gimmicky to me in the past, but my experience at the VR Showcase proved that with the right content VR can be incredibly compelling.

The first 'film' I selected was a VR experience called 'Notes on Blindness: Into Darkness.' It is based on the audio diaries of a man named John Hull, who recorded hours of observations about how he learned to 'see' the world through sound after losing his sight in 1983. Strictly speaking, 'Notes on Blindness' isn't an actual film; it is, after all, an effort to help the viewer understand what it's like to be blind. Instead of filmed footage, it uses audio and 3D animations that mimic the real world.

Each scene begins mostly in darkness, accompanied by Hull's narration of where he is and what he's hearing. As different sounds enter the space, he describes them and indicates their direction, using phrases like "Behind my right shoulder I hear a car starting," or "To my left I hear somebody running," in a way that prompts you to move your head around to look. In essence, he's directing you even if you don't realize it. After a while you discover that Hull is able to draw a mental picture of what's around him based on subtle cues, such as the different sounds raindrops make when hitting objects like a window or a teacup. As he speaks, scenes are gradually revealed in a manner reminiscent of The Matrix, though they still rely on your imagination to complete the mental image.

I hope I never experience real blindness; however, for the first time in my life I feel like I might have a very basic understanding of what it's like for a blind person to try to 'see' the world using their other senses. The experience was more powerful than I anticipated.

The trailer for ‘Notes on Blindness’ (above) will give you a rough idea of what I’m trying to describe here. The VR experience will be available for download on June 30 if you want to try it yourself.

I also viewed 'Witness 360: 7/7,' a VR film that follows the experience of Jacqui Putnam, a commuter on the London Tube during the terrorist bombings of July 7, 2005. Shot documentary-style, the film shows you the places Jacqui went that day, including the Tube itself, while you hear her vivid descriptions of what happened. When she mentions something like "The person sitting next to me," you turn and, sure enough, there's a person sitting next to you who roughly matches her description.

The experience was more tense than I expected. I knew what was going to happen, and yet as I stood there on the train next to everybody else – real people who just happened to be on the Tube – I kept thinking to myself ‘These people are about to die.’ The fact that I could look around and feel immersed in the situation, able to see the things Jacqui was describing, generated a visceral reaction that I’m not used to feeling while watching a documentary. It felt personal.

Despite very different subjects and creative approaches, both these VR experiences had one critical thing in common: neither one would have worked as effectively on a flat screen. They depended on a VR environment to achieve their impact.

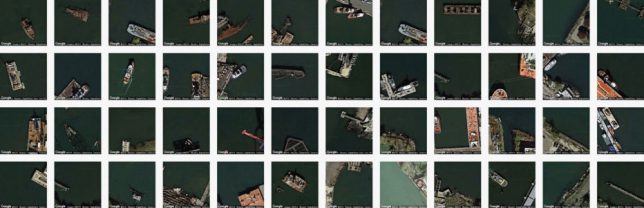

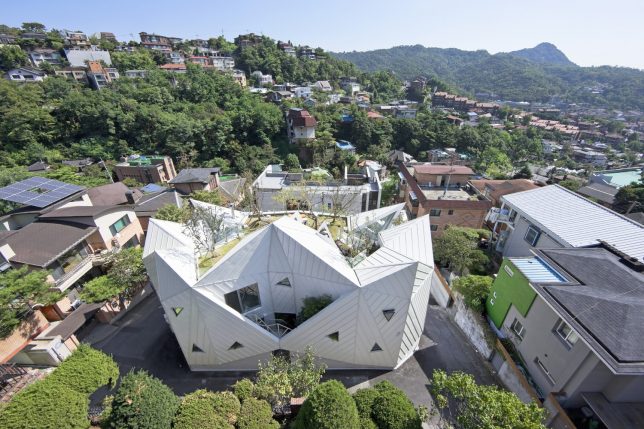

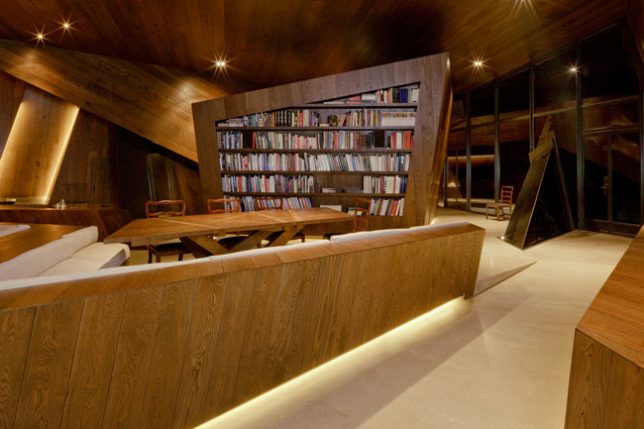

By now you’re probably asking how still photography fits into the VR world. Quite nicely, it turns out. Of course, the obvious applications are things like real estate photography, where 360º views can be critically important to attracting eyeballs. The real estate industry has been finding ways of doing this for years already, and new tools will only make the experience better and smoother. But it’s the creative possibilities that are really interesting.

One thing photography has always been good at is closing distance, i.e. taking you to a faraway place you may not be able to visit in person. It's the reason we know what most of the world looks like despite never having been to most of it. VR has the potential to take this a step further. In the same way that color photography allowed us to see places differently than we could in black and white, VR will allow us to see places in an immersive way that we can't experience with a two-dimensional picture. I'm not suggesting that VR is better than a still photo any more than I would suggest that a color photo is better than a black and white one. My point is that they are different, and each allows us to experience the world in a way the others don't.

Nice kitty. Imagine using a VR camera to put your viewer right into the middle of a pride of lions.

Photo: Jeff Keller

The key to VR still photography will be figuring out how to leverage the strengths of the medium. For example, most people enjoy a great landscape or wildlife photo. If you show me a beautiful Serengeti landscape with a lion in it, I'll probably love the photo. However, if you show me that same landscape in VR it might not be as compelling, since I can't see it all at once. But if you let me stand right in the middle of a pride of lions eating a wildebeest, you'll get my attention, because that's something I haven't experienced in a normal photo.

What all of these examples highlight is how important it will be for artists to take new and different approaches to capturing, editing, and presenting their work. It’s an open canvas, and one that’s still largely undefined.

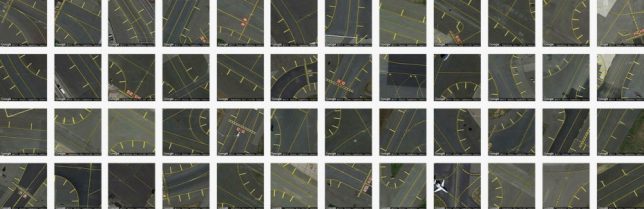

VR Requires New Grammar

As Oscar-winning cinematographer Emmanuel Lubezki told us a couple of months ago, the grammar and language of VR have yet to be written. This is true in a literal sense. Words like 'framing' and 'panning' simply don't apply to VR. New words are needed, and nobody has agreed on what those words are yet. The one thing people do agree on is that VR will require approaches appropriate to the medium.

This can be seen in the films described above, particularly ‘Witness.’ For example, the conventional documentary formula is to intercut interview footage with b-roll, but that never happens in ‘Witness.’ The convention works in flat films because you can lock the viewer into a rectangular frame and demand their attention. But what happens when the viewer has the freedom to look anywhere they want? Maybe they will get distracted by a picture hanging on the wall, or something happening outside a window. ‘Witness’ solves this by relying entirely on voiceover while featuring a few location shots of Jacqui Putnam throughout the film. This is just one example of where traditional filmmaking techniques don’t translate easily to VR, and there are many others.

It isn't the first time content creators have faced this challenge. In the early days of television, studios often tried to repackage shows made for radio, such as American soap operas, for TV. Daytime soap operas on radio were aimed at homemakers who could listen while working around the house. Studio executives had reservations about whether soap operas would even work on TV, since they would require the homemaker to actually watch a screen.

Early soap operas were produced for radio; when TV came along, producers had to figure out how to take advantage of the new medium.

Photo by G. Nelidoff, Chicago, for CBS/Columbia Broadcasting Company (Library of Congress) [Public domain], via Wikimedia Commons

Some production elements from radio didn’t translate well to TV. For example, producers had to re-think product placement and advertisements in shows – the very reason for the existence of soap operas in the first place – because having an actress pick up a box of laundry detergent and talk about its virtues in the middle of a scene just didn’t seem believable on TV. It took a few years before the industry perfected the formula.

I point this out because we're still in the early days of VR. It's easy to look at VR as it exists now and think of it as a gimmick, a tool for gamers, or a toy for tech nerds. And that's OK; people thought similar things about TV at one point, but once content creators figured out how to use the medium effectively there was no turning back. I suspect the same will be true of VR: once the language of VR is fully developed, and hardware for consuming content becomes more convenient (it will), there will be a lot of opportunity to do creative things that may not work on a flat screen.

Why VR is not 3D Television

I mentioned above that VR seemed to be everywhere at trade shows like CES and NAB this year. That’s an encouraging sign, but it’s worth noting that ubiquity of a technology at an industry trade show does not equate to commercial success. I need only mention 3D television to make my point, and several people have dismissed VR to me as just ‘the next 3D TV.’

I believe VR is a much more promising technology than 3D television, and will ultimately be more successful, for a couple of important reasons.

One advantage of VR compared to 3D TV is that viewers can at least try it with an inexpensive viewing device, such as Google Cardboard, and a smartphone.

3D television struggled with a classic chicken-and-egg situation. Networks were reluctant to invest in infrastructure to produce 3D content without some assurance that there would be a critical mass of audience; consumers were reluctant to invest in $1,000+ devices without some assurance that content would be available. For studios, this was potentially a very expensive experiment that carried a lot of financial risk. Also, many consumers had only recently upgraded to HDTV, and it was a tough sell to convince them to invest in new hardware so soon. By now everyone knows how this ended.

The stakes around VR are different. First, most VR content is being distributed through platforms like YouTube or on mobile devices where production standards are less stringent than for broadcast television. VR also has the advantage that the lowest common denominator for viewing content is the smartphone, meaning that most consumers already have a screen on which to watch content (alone or when combined with an inexpensive viewing device).

The lowest common denominator for viewing VR is the phone most of us already have in our pocket.

Photo: Dale Baskin Photography

On the production side of the equation, low-cost capture devices ranging in price from a few hundred to a few thousand dollars are easily accessible to content creators. It's not an unmanageable risk for someone running a successful YouTube channel, or even an indie filmmaker, to invest a small amount of money to try the technology. Similarly, it's easy to envision news media such as The New York Times, USA Today, or even your local TV station sending reporters into the field with a $1,000 VR camera to bring immersive experiences to their apps and web pages.

In one particularly telling move, last November The New York Times sent free Google Cardboard viewers to all of their print subscribers (over one million of them) to ensure that they could use The Times' new VR app. The Times followed up a few weeks ago by announcing that they would also send free Cardboard viewers to many of their digital subscribers. When the barrier to entry is so low that a content producer can afford to give all their subscribers a free device on which to consume their content, it's a great indication of how accessible the technology can be.

In November The New York Times sent Google Cardboard to all their print subscribers, and a few weeks ago announced similar plans for digital subscribers.

Are we there yet?

At this point I probably sound pretty enthusiastic about VR – which I am – but I’ll also provide a reality check and let you know that we’re not quite there yet in terms of the technology.

VR depends on belief: the belief that you're somewhere you're not. One of the things you figure out really fast with VR is that in order for it to be believable, every part of the experience must work. This includes image quality, the general viewing experience, audio, and even the space you're in and how you interact with it. If any part of the experience is incomplete or breaks, the experience becomes less believable.

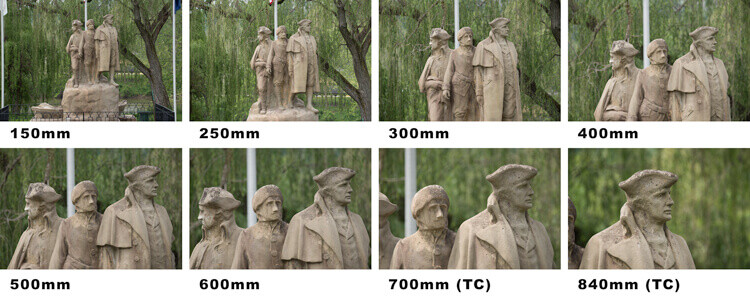

As a photographer, you'll notice this immediately when it comes to picture quality. We've become spoiled by high-resolution, high-dynamic-range sensors that are almost magic relative to what we had just a decade ago. VR cameras aren't there yet. Resolution is limited (usually 4K, but spread across the entire 360-degree field of view), highlights may be blown or shadows lost, and the richness of color we're used to just isn't there. However, in the same way that early digital photographers managed to create great photos with 2MP and 3MP cameras, VR content creators are finding ways to work within the limits of their tools.

One of the most accessible VR cameras available today is the Ricoh Theta (above), which lists for $260. Alternatively, the Nokia OZO (seen at the top of the article) lists for $60,000. The one thing they both have in common is that neither captures the same resolution, dynamic range, or richness of color we're used to with modern '2D' cameras.
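To put the point about 4K being "spread across the entire 360-degree field of view" into numbers, here is a rough back-of-the-envelope sketch. It assumes a 3840-pixel-wide equirectangular frame (one common way 360-degree video is stored) and a hypothetical headset with a 90-degree horizontal field of view; both figures are illustrative assumptions, not specifications of any camera or viewer mentioned in this article.

```python
# Back-of-the-envelope sketch. ASSUMPTIONS (illustrative only):
# a 3840-pixel-wide equirectangular "4K" frame and a hypothetical
# headset with a 90-degree horizontal field of view.
FRAME_WIDTH_PX = 3840   # assumed width of a "4K" 360-degree frame
FULL_CIRCLE_DEG = 360   # the frame wraps all the way around the viewer
HEADSET_FOV_DEG = 90    # assumed horizontal field of view of the headset


def pixels_per_degree(width_px: float, span_deg: float) -> float:
    """Horizontal pixels available for each degree of view."""
    return width_px / span_deg


def visible_width_px(width_px: float, span_deg: float, fov_deg: float) -> float:
    """Horizontal pixels of the frame that fall inside the viewer's field of view."""
    return pixels_per_degree(width_px, span_deg) * fov_deg


ppd = pixels_per_degree(FRAME_WIDTH_PX, FULL_CIRCLE_DEG)
visible = visible_width_px(FRAME_WIDTH_PX, FULL_CIRCLE_DEG, HEADSET_FOV_DEG)
print(f"{ppd:.1f} px per degree, about {visible:.0f} px across the visible view")
```

Under these assumptions, only about a quarter of the frame's horizontal pixels, roughly 960, are in front of the viewer at any moment, whereas a flat 4K frame on a TV shows all 3840 at once. That gap is one reason VR footage can look soft to eyes used to modern still cameras.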

Viewing devices will need to improve as well. Not only are they large and unwieldy, but the thing that makes VR so accessible – your mobile phone – is also one of the bottlenecks to the experience. Magnified by VR lenses, video looks pixelated and low resolution by today’s standards, sometimes exhibiting a ‘screen door effect’ similar to looking through a mesh screen. When Sony introduced a smartphone with a 4K display I initially thought of it as marketing overkill. In retrospect, I don’t know if they had VR in mind, but VR is a case where a 4K phone really could provide an improved experience. Suddenly, I like the idea of a 4K smartphone.

Audio is a much bigger challenge. There's an old adage in filmmaking, which I've discussed on the site before, that says an audience will forgive a bad picture, but they won't forgive bad sound. That's even truer in VR than on a flat screen, because an immersive experience is absolutely dependent on it. Audio for VR is in its infancy, and spatial audio that matches what you see, including the directionality of sound as you move around, is critical to creating a believable experience. One studio executive I spoke with at NAB told me the biggest challenge in creating believable sound for VR is that 70% of viewers don't actually wear headphones when watching VR, but instead rely on the speaker built into their phone, ensuring a suboptimal experience.

Immersive audio, including directional audio, is crucial for VR experiences that include sound. One challenge for content creators is that 70% of VR consumers today use only the tiny speaker built into their phone.

Finally, there's the disconnect between the virtual world and the real world. It's frustrating when you're immersed in a VR experience at NASA Mission Control and you reach for the control panel only to find empty space, or when you're standing in an open field but inadvertently bump into a wall. Some of this will be addressed through technology, but it's also one of the challenges content creators will have to address through creative choices.

The good news is that all of these things are solvable problems, and smart people are working on them.

The Future is Now

As exciting as VR is, I'm not suggesting that it will replace traditional, two-dimensional media such as photography or television. There's plenty of room for both mediums to exist side by side.

I recently shared this thought with a friend, who thoughtfully responded, "Then what type of stories are best told in VR versus other mediums?" After thinking about it, I realized this was probably the wrong question to ask. After all, if you replace the word VR in that sentence with any other form of media, like TV or print, it doesn't make sense. You can tell a story with any medium, but the challenge is figuring out how to leverage the strengths of each for maximum impact. In that sense, VR is no different.

Also, as much as I’m excited by VR, I really don’t want to have complete control over my viewing experience for everything. I want master filmmakers and photographers to craft a story in their image, or to show me the world as they see it, without necessarily giving me the freedom to mess it up. I can’t imagine how The Godfather would be any better if I had the freedom to look around the scene instead of watching it the way Francis Ford Coppola shot it. On the other hand, I look forward to as-yet-uncreated projects that allow me to participate more freely in the experience.

What’s potentially most exciting are the VR applications that haven’t been invented yet. I can’t wait for the day when NASA puts VR cameras on landers going to Mars or the moons of Saturn, allowing me to stand virtually on the icy surface of Titan, gazing out over a methane sea.

And now if you’ll excuse me, I’m off to make my first VR film.

Articles: Digital Photography Review (dpreview.com)
