The post Canon Unveils a Dual Fisheye Virtual Reality Lens, the RF 5.2mm f/2.8L appeared first on Digital Photography School. It was authored by Jaymes Dempsey.

Canon has announced a one-of-a-kind lens for EOS R cameras: the RF 5.2mm f/2.8L Dual Fisheye lens, which looks exactly as strange as it sounds.

And check out the lens again, this time mounted to the Canon EOS R5:

So what is this bizarre new lens? What’s its purpose?

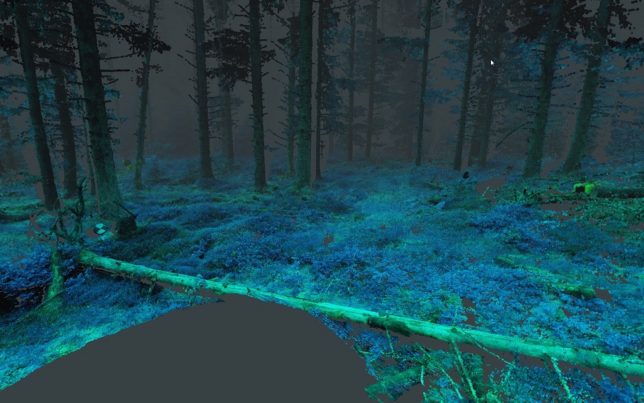

The RF 5.2mm f/2.8L is designed for virtual reality (VR) recording; it’s “the world’s first digital interchangeable dual fisheye lens capable of shooting stereoscopic 3D 180° VR imagery to a single image sensor.” In other words, the twin fisheye lenses record two side-by-side frames onto one sensor, each showing the scene from a slightly different viewpoint, much like a pair of eyes. When processed, this footage becomes a single stereoscopic 180-degree image, and with the proper equipment (the press release mentions the Oculus Quest 2), viewers can feel truly present in the scene.

It seems that, when the RF 5.2mm f/2.8L debuts, it will be compatible only with the EOS R5, though this could change once the lens hits the market. Such a unique lens is bound to turn heads, and Canon has clearly been hard at work. The lens offers a compact form factor (ideal for filmmakers who record on the go or who simply prefer to minimize kit size), weather resistance, a bright f/2.8 maximum aperture, and, most importantly, Canon’s built-in filter system. The latter lets you use neutral density (ND) filters while recording, which is essential for serious videographers.

Unfortunately, dual fisheye images can’t be processed with standard editing software. Instead, Canon is developing two (paid) programs capable of handling VR footage: a Premiere Pro plugin and a “VR Utility.” The company explains, “With the EOS VR Plug-In for Adobe Premiere Pro, creators will be able to automatically convert footage to equirectangular, and cut, color, and add new dimension to stories with Adobe Creative Cloud apps, including Premiere Pro,” while “Canon’s EOS VR Utility will offer the ability to convert clips from dual fisheye image to equirectangular and make quick edits.”

So who should think about purchasing this new lens? It’s a good question, and one without an easy answer. Canon’s decision to bring out a dedicated VR lens suggests a growing interest in creating VR content. But the day when most video is viewed through VR technology seems a long way off, at least from where I’m sitting.

That said, if VR recording sounds interesting, you should at least check out this nifty new lens. Canon suggests a December release date and a price tag of $1,999 USD, and you can expect Canon’s VR post-processing software around the same time.

Now over to you:

Are you interested in this new lens? Do you do (or hope to do) any VR recording? Share your thoughts in the comments below!



