
The Allen Institute for AI (AI2), created by Microsoft co-founder Paul Allen, has published new research on a type of artificial intelligence that can generate basic, if still largely nonsensical, images from a concept presented to the machine as a caption. The technology hints at an evolution in machine learning that may pave the way for smarter, more capable AI.

The research institute’s newly published study, which was recently highlighted by MIT, builds on the technology OpenAI demonstrated with its GPT-3 system. GPT-3’s machine learning model was trained on vast amounts of text, an approach that itself builds on the masking technique introduced by Google’s BERT.

Put simply, BERT’s masking technique trains a machine learning model by presenting it with natural-language sentences that have a word missing, requiring the model to predict the missing word. Training the AI this way teaches it to recognize language patterns and word usage, resulting in a machine that can fairly effectively understand natural language and interpret its meaning.
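The masking idea can be illustrated with a toy sketch. This is not BERT itself: BERT hides a fraction of subword tokens and trains a Transformer network to recover them, whereas the hypothetical helpers below (`mask_one_word`, `build_context_counts`, `predict_masked`) hide a single word and guess it from a simple count of which words appeared between the same two neighbours in a tiny, made-up corpus. The point is only to show what a "sentence with a word missing" training example looks like and how patterns in the text make the blank predictable.

```python
import random
from collections import Counter
from typing import Dict, List, Optional, Tuple

MASK = "[MASK]"


def mask_one_word(sentence: str, rng: random.Random) -> Tuple[List[str], int, str]:
    """Hide one randomly chosen word; return (masked tokens, position, hidden word)."""
    tokens = sentence.lower().split()
    pos = rng.randrange(len(tokens))
    masked = tokens.copy()
    masked[pos] = MASK
    return masked, pos, tokens[pos]


def build_context_counts(corpus: List[str]) -> Dict[Tuple[str, str], Counter]:
    """Count which words appear between each (left neighbour, right neighbour) pair."""
    counts: Dict[Tuple[str, str], Counter] = {}
    for sentence in corpus:
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        for i in range(1, len(tokens) - 1):
            key = (tokens[i - 1], tokens[i + 1])
            counts.setdefault(key, Counter())[tokens[i]] += 1
    return counts


def predict_masked(
    masked: List[str], pos: int, counts: Dict[Tuple[str, str], Counter]
) -> Optional[str]:
    """Guess the hidden word from its two neighbours; None if that context was never seen."""
    padded = ["<s>"] + masked + ["</s>"]
    key = (padded[pos], padded[pos + 2])  # padded[pos + 1] is the [MASK] itself
    if key not in counts:
        return None
    return counts[key].most_common(1)[0][0]


# A tiny, made-up "training corpus" (hypothetical data for illustration only).
corpus = [
    "the dog chased the ball",
    "the dog chased the cat",
    "a cat chased a mouse",
]
counts = build_context_counts(corpus)
print(predict_masked(["the", MASK, "chased", "the", "ball"], 1, counts))  # dog
```

A real model replaces the count table with a neural network, which lets it generalize to contexts it has never seen verbatim; the count table simply returns `None` for those.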


Building on this, the training evolved to pair an image with a caption that has a missing word. For example, an image of an animal might come with a caption describing the animal and its environment, with only the word for the animal missing, forcing the AI to work out the right answer from both the sentence and the related image. This taught the machine to recognize patterns in how visual content relates to the words in the captions.
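Why the image helps can be shown with a small, hedged sketch. Here a caption has its animal word masked, a hypothetical language model supplies scores for candidate words from the caption alone, and a hypothetical object detector supplies scores from the picture. Multiplying the two (adding log-probabilities) is an assumed, simplified combination rule; models such as LXMERT learn to fuse text and image inside a single network rather than scoring them separately like this.

```python
import math
from typing import Dict

MASK = "[MASK]"


def mask_word(caption: str, word: str) -> str:
    """Replace every occurrence of `word` in the caption with the mask token."""
    return " ".join(MASK if token == word else token for token in caption.split())


def fill_caption_mask(
    text_scores: Dict[str, float],
    image_scores: Dict[str, float],
    floor: float = 1e-3,
) -> str:
    """Pick the candidate word that best fits both the caption and the image.

    text_scores: hypothetical (positive) probabilities for the masked slot from the caption alone.
    image_scores: hypothetical detector confidences; unreported words get `floor`.
    """
    def combined(word: str) -> float:
        # Adding log-probabilities multiplies the two sources of evidence.
        return math.log(text_scores[word]) + math.log(image_scores.get(word, floor))

    return max(text_scores, key=combined)


caption = mask_word("a sheep grazing in a green field", "sheep")
print(caption)  # a [MASK] grazing in a green field

text_scores = {"cow": 0.4, "sheep": 0.4, "dog": 0.2}  # the caption alone cannot decide
image_scores = {"sheep": 0.9, "grass": 0.8}           # the picture shows a sheep
print(fill_caption_mask(text_scores, image_scores))   # sheep
```

With text alone, "cow" and "sheep" are equally plausible for "a [MASK] grazing in a green field"; the image evidence is what tips the answer, which is the kind of text-to-visual association this training is meant to teach.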

This is where the AI2 research comes in, with the study posing the question: ‘Do vision-and-language BERT models know how to paint?’

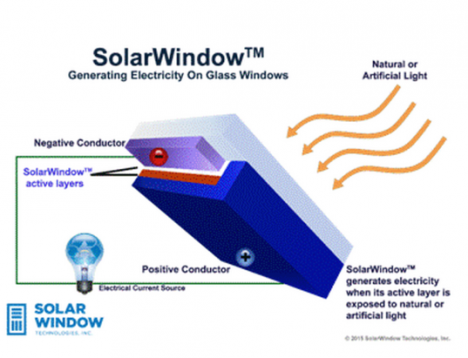

Researchers at the institute built on the visual-text technique described above to teach AI to generate images from its understanding of text captions. To make this possible, they introduced a twist on the masking technique, this time masking parts of images paired with captions to train a model called X-LXMERT, an extension of LXMERT, a model that uses multiple encoders to learn connections between language and visual data.

The researchers explain in the study [PDF]:

Interestingly, our analysis leads us to the conclusion that LXMERT in its current form does not possess the ability to paint – it produces images that have little resemblance to natural images …

We introduce X-LXMERT that builds upon LXMERT and enables it to effectively perform discriminative as well as generative tasks … When coupled with our proposed image generator, X-LXMERT is able to generate rich imagery that is semantically consistent with the input captions. Importantly, X-LXMERT’s image generation capabilities rival state-of-the-art image generation models (designed only for generation), while its question-answering capabilities show little degradation compared to LXMERT.

With the visual masking technique added, the model had to learn to predict the masked parts of each image from its caption, gradually learning the logical and conceptual layout of the visual world in addition to the connections between visual data and language. For example, a human reading a description of a clock tower in a town can infer that the tower is probably surrounded by smaller buildings.
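A toy sketch can make the visual masking step concrete. It assumes the image has been divided into a grid of cells, each labelled with a discrete "visual word" such as `building` or `tower`; this grid, the helper names, and the neighbour-vote predictor are hypothetical stand-ins, not X-LXMERT's actual method, which learns the prediction with a neural network. Hidden cells are guessed from their visible neighbours, and labels the caption mentions get a small assumed bonus so that the text can settle otherwise ambiguous cells.

```python
import random
from collections import Counter
from typing import Dict, List, Optional, Set, Tuple

Cell = Tuple[int, int]
Grid = List[List[Optional[str]]]


def mask_cells(grid: Grid, cells: List[Cell]) -> Tuple[Grid, Dict[Cell, str]]:
    """Copy the grid, hide the given cells (None) and return (masked grid, hidden labels)."""
    masked = [row.copy() for row in grid]
    targets: Dict[Cell, str] = {}
    for r, c in cells:
        targets[(r, c)] = grid[r][c]
        masked[r][c] = None
    return masked, targets


def random_cells(grid: Grid, ratio: float, rng: random.Random) -> List[Cell]:
    """Choose roughly `ratio` of all cells at random, as a training step might."""
    cells = [(r, c) for r in range(len(grid)) for c in range(len(grid[0]))]
    return rng.sample(cells, round(ratio * len(cells)))


def predict_cell(
    masked: Grid, r: int, c: int, caption_words: Set[str], caption_bonus: float = 0.5
) -> Optional[str]:
    """Guess a hidden cell from its visible up/down/left/right neighbours.

    Labels mentioned in the caption get a small hypothetical bonus, which breaks
    ties in favour of concepts the text says should be present.
    """
    votes: Counter = Counter()
    for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        nr, nc = r + dr, c + dc
        if 0 <= nr < len(masked) and 0 <= nc < len(masked[0]) and masked[nr][nc] is not None:
            votes[masked[nr][nc]] += 1
    for label in votes:
        if label in caption_words:
            votes[label] += caption_bonus
    return votes.most_common(1)[0][0] if votes else None


# Hypothetical labelled grid of a town scene with a tower in the middle.
town = [
    ["sky", "sky", "tower", "sky", "sky"],
    ["building", "building", "tower", "building", "building"],
    ["building", "building", "tower", "building", "building"],
    ["road", "road", "road", "road", "road"],
]
caption_words = set("a large clock tower in the middle of a town".split())
masked, targets = mask_cells(town, [(1, 0), (1, 2), (2, 1)])
predictions = {cell: predict_cell(masked, *cell, caption_words) for cell in targets}
print(predictions == targets)  # True
```

The hidden tower cell sits between two tower cells and two building cells, so the neighbours alone are split evenly; the caption's mention of a tower decides it. During training, `random_cells` would pick which cells to hide on each pass.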


An AI-generated image based on the caption, ‘A large painted clock tower in the middle of town.’

Using this visual masking technique, the AI2 researchers gave a machine the same general understanding, prompting it with the caption ‘A large clock tower in the middle of a town.’ Though the resulting image (above) isn’t realistic and wouldn’t be mistaken for an actual photo, it does demonstrate the machine’s general grasp of the phrase’s meaning and of the kinds of elements found in a real-world clock tower setting.

The images demonstrate the machine’s ability to understand both the visual world and written text and to make logical assumptions based on the limited data provided. This mirrors the way a human understands the world and written text describing it.

For example, a human, when given a caption, could sketch a concept drawing that presents a logical interpretation of how the captioned scene may look in the real world, such as computer monitors likely sitting on a desk, a skier likely being on snow and bicycles likely being located on pavement.


This development in AI research represents a simple, child-like form of abstract thinking that hints at a future in which machines may develop far more sophisticated understandings of the world and, perhaps, of any other concepts they are trained to relate to one another. The next step in this evolution is likely an improved ability to generate images, resulting in more realistic content.

Using artificial intelligence to generate photo-realistic images is already possible, though generating highly specific photo-realistic images from a text description is, as shown above, still a work in progress. Machine learning has also been used to demonstrate other potential applications for AI, such as a study Google published last month showing how crowdsourced 2D images can be used to generate high-quality 3D models of popular structures.

Articles: Digital Photography Review (dpreview.com)
