Editor’s note: This is the third article in a three-part series by guest contributor Vasily Zubarev. The first two parts can be found here:

- Part I: What is computational photography?

- Part II: Computational sensors and optics

You can visit Vasily’s website, where he also demystifies other complex subjects. If you find this article useful, we encourage you to give him a small donation so that he can write about other interesting topics.

The article has been lightly edited for clarity and to reflect a handful of industry updates since it first appeared on the author’s own website.

Computational Lighting

Soon we’ll go so goddamn crazy that we’ll want to control the lighting after the photo has been taken, too: to turn cloudy weather sunny, or to change the lights on a model’s face after the shoot. It seems a bit wild now, but let’s talk again in ten years.

We’ve already invented a dumb device for controlling light: the flash. Flashes have come a long way, from the large lamp boxes that helped work around the technical limitations of early cameras to the modern LED flashes that spoil our pictures so badly that we mostly use them as flashlights.

Programmable Flash

It’s been a long time since most smartphones switched to dual-LED flashes: a combination of a warm (amber) and a cool (white) LED whose brightness is balanced to match the color temperature of the scene. On the iPhone, for example, it’s called True Tone, and it’s controlled by a small ambient light sensor and a piece of code with a hacky formula.


- Link: Demystifying iPhone’s Amber Flashlight
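Apple’s actual formula isn’t public, so here is only a rough sketch of the idea. It assumes a warm LED of about 1,800 K and a cool LED of about 6,500 K (illustrative values, not the specs of any real phone) and uses a common rule of thumb: interpolate between the two LEDs in mired space (1,000,000 / kelvin) to find how much of each to fire.

```python
# Illustrative LED color temperatures in kelvin (assumed, not real phone specs).
WARM_LED_K = 1800.0
COOL_LED_K = 6500.0


def mired(kelvin: float) -> float:
    """Convert a color temperature in kelvin to mireds (micro reciprocal degrees)."""
    return 1_000_000.0 / kelvin


def dual_tone_weights(ambient_k: float,
                      warm_k: float = WARM_LED_K,
                      cool_k: float = COOL_LED_K) -> tuple[float, float]:
    """Return (warm, cool) drive fractions that sum to 1.

    Rough approximation: interpolates linearly in mired space and ignores
    the LEDs' different brightness and tint, which a real flash would
    also have to calibrate for."""
    target = mired(ambient_k)
    warm_m, cool_m = mired(warm_k), mired(cool_k)
    # Share of the warm LED needed to pull the mix toward the target.
    w = (target - cool_m) / (warm_m - cool_m)
    # Clamp when the ambient light is warmer or cooler than either LED.
    w = min(max(w, 0.0), 1.0)
    return w, 1.0 - w
```

Under these assumed values, `dual_tone_weights(3000.0)` drives the warm LED at roughly 45% and the cool one at roughly 55%.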

Then we started to tackle the problem every flash has: overexposed faces and foregrounds. Everyone did it in their own way. The iPhone got Slow Sync Flash, which lengthens the exposure (a slower shutter speed) in the dark so the background collects more ambient light. Google Pixel and other Android smartphones began using their depth sensors to combine two frames taken in quick succession, one with flash and one without. The foreground was taken from the flash photo, while the background kept its ambient illumination.
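The exact pipeline on those phones isn’t described here; the sketch below only illustrates the compositing idea. It assumes two already aligned grayscale frames and a per-pixel depth map, all stored as plain 2D lists, and the `near` and `far` thresholds (in metres) are made-up parameters. Near pixels come from the flash frame, far pixels from the ambient one, with a linear blend in between so the seam isn’t visible.

```python
def composite_flash(flash, ambient, depth, near=1.0, far=2.0):
    """Blend two aligned grayscale frames using a depth map.

    flash, ambient, depth: equally sized 2D lists (rows of floats).
    Pixels closer than `near` metres come from the flash frame, pixels
    farther than `far` come from the ambient frame, and the band in
    between is linearly blended to avoid a hard seam."""
    if far <= near:
        raise ValueError("far must be greater than near")
    out = []
    for f_row, a_row, d_row in zip(flash, ambient, depth):
        row = []
        for f, a, d in zip(f_row, a_row, d_row):
            # Weight of the flash frame: 1 when near, 0 when far.
            t = (far - d) / (far - near)
            w = min(max(t, 0.0), 1.0)
            row.append(w * f + (1.0 - w) * a)
        out.append(row)
    return out
```

For a pixel at 1.5 m, halfway through the transition band, the result is an even mix of the flash and ambient values.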


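Further uses of a programmable multi-flash are vague. The only interesting application so far has come from computer vision, where it was once used to produce assembly-style illustrations (like the ones for Ikea bookshelves) by detecting object borders more accurately: flashes placed on different sides of the lens each cast a thin shadow along depth discontinuities, and those shadows reveal the true edges. See the paper below.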

- Link: Non-photorealistic Camera: Depth Edge Detection and Stylized Rendering using Multi-Flash Imaging
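Here is a simplified one-scanline sketch of the multi-flash idea. It assumes just two flashes, one to the left and one to the right of the lens. Dividing each flash image by the per-pixel maximum over all flash images cancels surface color and leaves shadows as low ratios. A sharp drop in the ratio while walking away from a flash marks a depth edge. The `drop` threshold and the toy values are illustrative assumptions.

```python
def depth_edges_1d(left_flash, right_flash, drop=0.5, eps=1e-6):
    """Return sorted indices of depth edges on one scanline.

    left_flash / right_flash: intensities of the same scanline lit by a
    flash placed left / right of the lens. Each flash casts its shadow on
    the side of an occluder facing away from it."""
    # Shadow-free reference: the brightest value seen at each pixel.
    i_max = [max(l, r) for l, r in zip(left_flash, right_flash)]
    ratio_l = [l / (m + eps) for l, m in zip(left_flash, i_max)]
    ratio_r = [r / (m + eps) for r, m in zip(right_flash, i_max)]
    edges = set()
    n = len(i_max)
    # Left flash: walk left-to-right; a lit-to-shadow drop marks the edge
    # at the last lit pixel (the occluder's boundary).
    for x in range(1, n):
        if ratio_l[x - 1] - ratio_l[x] > drop:
            edges.add(x - 1)
    # Right flash: same test, walking right-to-left.
    for x in range(n - 2, -1, -1):
        if ratio_r[x + 1] - ratio_r[x] > drop:
            edges.add(x + 1)
    return sorted(edges)


# Toy scene: a brighter object at pixels 3..5 in front of a background.
left = [100, 100, 100, 150, 150, 150, 10, 100, 100, 100]   # shadow at 6
right = [100, 100, 10, 150, 150, 150, 100, 100, 100, 100]  # shadow at 2
print(depth_edges_1d(left, right))  # [3, 5]
```

A texture edge that looks the same under both flashes cancels out in the ratio, which is why this finds depth edges rather than color edges.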

Light Stage

Light is fast, which has always made coding it easy: we can switch the lighting a hundred times during a single frame, and the light itself still won’t be the bottleneck. That’s how Light Stage was created back in 2005.


- Video link: Light Stage demo video

The essence of the method is to illuminate the subject from every possible angle within each frame of a real 24 fps movie. To do this, it uses 150+ lamps and a high-speed camera that captures hundreds of images under different lighting conditions for every movie frame.
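The arithmetic behind “hundreds of images per frame” shows why a high-speed camera is needed: the camera, not the light, sets the limit. The 100 lighting conditions below are just an example number.

```python
def required_camera_fps(output_fps: float, lighting_conditions: int) -> float:
    """Capture rate needed so each output frame gets one exposure per lighting condition."""
    return output_fps * lighting_conditions


def max_exposure_ms(output_fps: float, lighting_conditions: int) -> float:
    """Longest possible exposure per lighting condition, ignoring sensor readout time."""
    return 1000.0 / required_camera_fps(output_fps, lighting_conditions)


# Example: 24 fps output with 100 lighting conditions per frame (assumed numbers).
print(required_camera_fps(24, 100))          # 2400
print(round(max_exposure_ms(24, 100), 3))    # 0.417
```

So each lighting condition gets well under half a millisecond of exposure, which is why such rigs need very bright lamps and a fast, sensitive sensor.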

A similar approach is now used when shooting movie scenes that mix live action with CGI. It lets you fully control the subject’s lighting in post-production and place it into scenes with arbitrary lighting. You just grab the shots lit from the required angles, tint them a little, and you’re done.
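“Grab the shots and tint them” works because light is additive: an image of the subject under any mix of lamps equals a weighted sum of the images taken with one lamp at a time. Below is a minimal sketch of that principle. It assumes RGB images stored as 2D lists of `(r, g, b)` tuples and one RGB weight per lamp (for example, sampled from the target scene’s lighting). It illustrates the idea, not the production pipeline.

```python
Pixel = tuple[float, float, float]
Image = list[list[Pixel]]


def relight(basis: list[Image], weights: list[Pixel]) -> Image:
    """Synthesize a new lighting setup from one-light-at-a-time captures.

    basis[i] is the subject lit only by lamp i; weights[i] is the RGB
    intensity (the "tint") that lamp i's direction should have in the
    target scene. Because light adds up, the result is simply the
    per-channel weighted sum of the basis images."""
    if not basis or len(basis) != len(weights):
        raise ValueError("need one RGB weight per basis image")
    h, w = len(basis[0]), len(basis[0][0])
    out = [[(0.0, 0.0, 0.0) for _ in range(w)] for _ in range(h)]
    for img, (wr, wg, wb) in zip(basis, weights):
        for y in range(h):
            for x in range(w):
                r, g, b = img[y][x]
                acc_r, acc_g, acc_b = out[y][x]
                out[y][x] = (acc_r + wr * r, acc_g + wg * g, acc_b + wb * b)
    return out
```

For example, weighting one lamp pure red and another pure blue gives a subject lit red from one side and blue from the other, without reshooting anything.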


Unfortunately, this is hard to do on mobile devices, but perhaps someone will like the idea and make it happen. I’ve seen an app from developers who captured a 3D model of a face by lighting it with the phone’s flashlight from different sides.
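That app’s method isn’t described, but the classic technique for recovering shape by lighting a subject from different sides is photometric stereo. Here is a minimal sketch for a single pixel. It assumes a matte (Lambertian) surface, no shadows, and three known, non-coplanar unit light directions. Brightness under light k is then albedo × (n · l_k), so three measurements give a 3×3 linear system for the albedo-scaled normal.

```python
import math

Vec3 = tuple[float, float, float]


def _det3(m):
    """Determinant of a 3x3 matrix given as three row tuples."""
    (a, b, c), (d, e, f), (g, h, i) = m
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)


def photometric_normal(lights: list[Vec3], intensities: Vec3) -> tuple[Vec3, float]:
    """Recover (unit normal, albedo) for one pixel from three images.

    Assumes I_k = albedo * dot(n, l_k) for each unit light direction l_k.
    Solves L @ g = I with Cramer's rule, where g = albedo * n."""
    det = _det3(lights)
    if abs(det) < 1e-12:
        raise ValueError("light directions must not be coplanar")
    g = []
    for col in range(3):
        # Replace column `col` of L with the measured intensities.
        m = [tuple(intensities[r] if c == col else lights[r][c] for c in range(3))
             for r in range(3)]
        g.append(_det3(m) / det)
    albedo = math.sqrt(sum(v * v for v in g))
    normal = tuple(v / albedo for v in g)
    return normal, albedo
```

Repeating this for every pixel gives a normal map, which can then be integrated into a rough 3D surface.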

Lidar and Time-of-Flight Camera

Lidar is a device that measures the distance to objects. Thanks to the recent hype around self-driving cars, you can now find a cheap lidar in any dumpster. You’ve probably seen those rotating thingies on the roofs of some vehicles? Those are lidars.

We still can’t fit a laser lidar into a smartphone, but we can go with its younger brother: the time-of-flight (ToF) camera. The idea is ridiculously simple: a special separate camera paired with its own light source, usually infrared. The camera measures how long the emitted light takes to bounce off objects and come back, and builds a depth map of the scene from that.
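“How long the light takes” converts to distance through the speed of light. A direct ToF sensor times the round trip, so d = c·t/2. Many ToF modules are indirect instead: they measure the phase shift φ of light modulated at frequency f, so d = c·φ / (4πf), and readings repeat beyond c / (2f). The sketch below just encodes these textbook relations; the 20 MHz figure in the usage note is an assumed example, not the spec of any particular sensor.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def distance_direct(round_trip_s: float) -> float:
    """Direct ToF: light travels to the object and back, so halve the path."""
    return C * round_trip_s / 2.0


def distance_from_phase(phase_rad: float, mod_freq_hz: float) -> float:
    """Indirect (continuous-wave) ToF: distance from the modulation phase shift."""
    return C * phase_rad / (4.0 * math.pi * mod_freq_hz)


def ambiguity_range(mod_freq_hz: float) -> float:
    """Distance at which the phase wraps around 2*pi and readings start to repeat."""
    return C / (2.0 * mod_freq_hz)
```

At an assumed 20 MHz modulation, `ambiguity_range(20e6)` is about 7.5 m, which is plenty for portraits and autofocus.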


Modern ToF cameras are accurate to about a centimeter. The latest top models from Samsung and Huawei use them to build a depth map for the bokeh effect and to improve autofocus in the dark. The latter, by the way, works quite well. I wish every device had one.

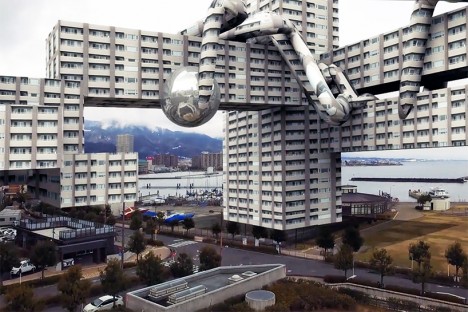
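A back-of-the-envelope check shows why centimeter accuracy is impressive. It isn’t a claim about any specific sensor, and the 20 MHz modulation frequency is an assumption. Moving a surface 1 cm farther away adds only tens of picoseconds to the round trip, or a fraction of a degree of phase for an indirect sensor.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def round_trip_time_s(depth_step_m: float) -> float:
    """Extra round-trip time caused by moving a surface depth_step_m farther away."""
    return 2.0 * depth_step_m / C


def phase_step_rad(depth_step_m: float, mod_freq_hz: float) -> float:
    """Phase change an indirect ToF sensor must resolve for the same depth step."""
    return 4.0 * math.pi * mod_freq_hz * depth_step_m / C


# 1 cm of depth at an assumed 20 MHz modulation frequency.
print(f"{round_trip_time_s(0.01) * 1e12:.1f} ps")                 # 66.7 ps
print(f"{math.degrees(phase_step_rad(0.01, 20e6)):.2f} degrees")  # 0.48 degrees
```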

Knowing the exact depth of the scene will be useful in the coming era of augmented reality. Pointing a lidar at surfaces to build an initial 3D map is far more accurate and much less effort than analyzing camera images.
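Turning a depth map into that first 3D map is mostly geometry. Here is a minimal sketch using a pinhole camera model. The intrinsics `fx`, `fy` (focal lengths in pixels) and `cx`, `cy` (principal point) are assumed inputs. The depth values are assumed to already be Z distances along the optical axis; a raw ToF reading is a radial distance and would need converting first.

```python
def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project a depth map into camera-space 3D points (pinhole model).

    depth: 2D list of Z distances in metres; non-positive values are
    treated as missing and skipped. fx, fy: focal lengths in pixels;
    cx, cy: principal point in pixels."""
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue  # no valid measurement for this pixel
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points
```

The resulting point cloud is what an AR framework would fit planes and meshes to.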

Projector Illumination

To finally get serious about computational lighting, we have to switch from regular LED flashes to projectors, devices that can project a 2D picture onto a surface. Even a simple monochrome grid would be a good start for smartphones.

The first benefit of a projector is that it can illuminate only the parts of the image that need light. No more burnt-out faces in the foreground. Objects can be recognized and left unlit, just as the adaptive (matrix) headlights of some modern cars avoid blinding oncoming drivers while still illuminating pedestrians. Even at a low projector resolution, such as 100×100 dots, the possibilities are exciting.

Today, you can’t surprise a kid with a car that has controllable headlights.
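As a hypothetical sketch of “lighting only what needs it”, a low-resolution projector could set each dot’s brightness from the depth that dot sees. Light falls off with the square of distance, so scaling brightness with distance squared keeps near subjects from burning out. An optional mask switches off dots that must stay dark, such as an oncoming driver’s eyes. `full_power_distance` and the mask are illustrative parameters, not features of any real device.

```python
def projector_pattern(depth, mask=None, full_power_distance=1.0, max_level=1.0):
    """Per-dot brightness for a low-resolution projector (e.g. 100x100 dots).

    depth: 2D list of distances in metres seen by each projector dot.
    mask: optional 2D list of bools; True means "leave this dot dark".
    Brightness grows with distance squared to cancel inverse-square
    falloff and is clamped to max_level from full_power_distance onward."""
    out = []
    for y, row in enumerate(depth):
        out_row = []
        for x, d in enumerate(row):
            if mask is not None and mask[y][x]:
                out_row.append(0.0)  # deliberately unlit dot
                continue
            level = max_level * (d / full_power_distance) ** 2
            out_row.append(min(level, max_level))
        out.append(out_row)
    return out
```

With these defaults, a face at 0.5 m gets a quarter of the power that a wall at 1 m or more receives, so both end up with roughly similar exposure.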

The second, more realistic use of a projector is to cast an invisible (infrared) grid onto the scene to build a depth map. With a grid like this, you can safely throw away all your neural networks and lidars: every distance to the objects in the image can be calculated with the simplest computer vision algorithms. This is how the original Microsoft Kinect worked (rest in peace), and it was great.
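The “simplest computer vision” here is triangulation. Projector and camera sit a baseline B apart, so a pattern dot that shifts d pixels from where it would appear for a point at infinity lies at depth Z = f·B/d, with f the camera’s focal length in pixels. This is a minimal sketch, not the Kinect’s actual algorithm. It finds the shift with a simple sum-of-absolute-differences search along one row and assumes a rectified setup where dots move only along rows. The 580 px focal length and 7.5 cm baseline are example values.

```python
def find_disparity(reference, observed, x, half_window=2, max_shift=10):
    """Find how far the pattern around column x of `reference` moved in `observed`.

    Both are 1D rows of intensities; the best shift minimises the sum of
    absolute differences between the reference patch and the candidate."""
    if x - half_window < 0:
        raise ValueError("window must fit inside the row")
    patch = reference[x - half_window : x + half_window + 1]
    best_shift, best_cost = 0, float("inf")
    for s in range(max_shift + 1):
        start = x - half_window + s
        candidate = observed[start : start + len(patch)]
        if len(candidate) < len(patch):
            break  # ran off the end of the row
        cost = sum(abs(a - b) for a, b in zip(patch, candidate))
        if cost < best_cost:
            best_shift, best_cost = s, cost
    return best_shift


def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Triangulate depth: Z = f * B / d (zero disparity means infinitely far)."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


# Toy pattern row, and the same row as seen shifted 5 px by a nearby surface.
reference = [3, 1, 4, 1, 5, 9, 2, 6, 5, 3, 5, 8, 9, 7, 9, 3, 2, 3, 8, 4]
observed = [0] * 5 + reference[:-5]
shift = find_disparity(reference, observed, x=6)
print(shift)                                        # 5
print(round(depth_from_disparity(shift, 580.0, 0.075), 2))  # 8.7
```

A pseudo-random dot pattern matters here: it makes every patch unique, so the search can’t lock onto the wrong copy of a repeating grid.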

Of course, it’s worth mentioning the Dot Projector for Face ID on the iPhone X and later models. It’s our first small step toward projector technology, but quite a noticeable one.

Dot Projector in iPhone X.

Vasily Zubarev is a Berlin-based Python developer and a hobbyist photographer and blogger. To see more of his work, visit his website or follow him on Instagram and Twitter.

Articles: Digital Photography Review (dpreview.com)
