One of the biggest frustrations when taking pictures is discovering that your photos are out of focus. Over the past few years, camera autofocus systems from every manufacturer have become much more sophisticated, but they’ve also become more complex. If you want to utilize them to their full potential, you’re often required to change settings for different scenarios.

The autofocus system introduced in Sony’s a6400, and added to the a9 via a firmware update, aims to change that, making autofocus simple for everyone from casual users to pro photographers. While all manufacturers are working to make autofocus more intelligent and easier to use, our first impressions are that, in practice, Sony’s new ‘real-time tracking’ AF system really does take away the complexity and remove much of the headache of autofocus, so that you can focus on the action, the moment, and your composition. Spoiler: if you’d just like to see how versatile this system can be, jump to our real-world demonstration video below.


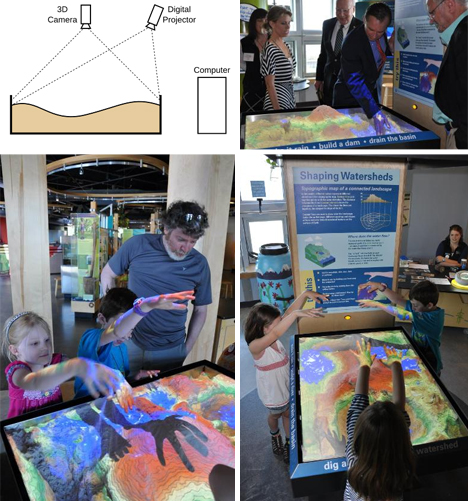

When I initiated focus on this skater, he was far away and tiny in the frame, so the a9 used general subject tracking to lock on to him at first. It then tracked him through his entire run, switching automatically to Face Detect as he approached. This seamless tracking, combined with a 20fps burst, allowed me to concentrate on my composition and get the lighting just right, without constraining myself by keeping an AF point over his face. For fast-paced, erratic motion, good subject tracking can make or break your shot.

So what is ‘Real-time tracking’? Now simply called ‘Tracking’, it’s Sony’s new subject tracking mode. Subject tracking lets you tell the camera what your subject is and then trust it to follow that subject. Simply place your AF point over the subject and half-press the shutter to focus; the camera will keep track of it wherever it moves in the frame by automatically shifting the AF points as necessary. Until recently, the best implementation we’d seen was Nikon’s 3D Tracking on its DSLRs. Sony’s new system takes some giant leaps forward, replacing the ‘Lock-on AF’ mode, which was often unreliable: it would sometimes jump to unrelated subjects far away, or track an entire human body and miss focus on the face and eyes. The new system is rock-solid, meaning you can just trust it to track and focus your subject while you concentrate on composing your photos.

What makes the new system better? Real-time tracking now uses additional information to track your subject – so much information, in fact, that it feels as if the autofocus system really understands who or what your subject is, making it arguably the ‘stickiest’ system we’ve seen to date.

Pattern recognition is now used to identify your subject, while color, brightness, and distance information are used more intelligently for tracking so that, for example, the camera won’t jump from a near subject to a very far one. Most clever of all, though, is the use of face and eye detection trained with machine learning to help the camera truly understand a human subject.

What do we mean by ‘machine learning’? More and more camera (and smartphone) manufacturers are using machine learning to improve everything from image quality to autofocus. Here, Sony has essentially trained a model to detect human subjects, faces, and eyes by feeding it thousands, perhaps millions, of images. These images show the faces and eyes of different people (kids, adults, even animals) in different positions, and were tagged beforehand (presumably with human input) to identify the eyes and faces. This allows Sony’s AF system to ‘learn’ and build up a model for detecting human and animal eyes in a very robust manner.

This model is then used in real time by the camera’s AF system to detect eyes and understand your subject in the new ‘real-time tracking’ mode. While companies like Olympus and Panasonic are using similar machine-learning approaches to detect bodies, trains, motorcyclists and more, Sony’s system has been the most versatile in our initial testing.

Real-time tracking’s ability to transition seamlessly from Eye AF to general subject tracking means that even if your subject’s eye was visible right up until a perfect candid moment like this one, your subject will remain in focus when the eye disappears, so you don’t miss short-lived moments such as this. Note: this image is illustrative and was not shot using Sony’s ‘Tracking’ mode.

What does all of this mean for the photographer? Most importantly, you get an autofocus system that works reliably in almost any situation. Place your AF point over your subject, half-press the shutter, and real-time tracking will gather pattern, color, brightness, distance, face and eye information about your subject so comprehensively that it can use all of it to follow your subject in real time. This means you can concentrate on the composition and the moment. There is no longer any need to focus (pun intended) on keeping your AF point over your subject, which for years has constrained composition and made it difficult to maintain focus on erratic subjects.

The best part of this system is that it just works, seamlessly transitioning between Eye AF, Face Detect and ‘general’ subject tracking. If you’re tracking a human, the camera will always prioritize the eye. If it can’t find the eye, it will prioritize the face. Even if your subject turns away so that you can’t see their face, or is momentarily occluded, real-time tracking will continue to track them, switching back to the face or eye as soon as it is visible again. This means your subject is almost always already in focus, ready for you to capture the exact moment you want.

One of the best things about this behavior is how it handles scenes with multiple people, a common occurrence at weddings, events, or even in your own household. Although Eye AF was incredibly sticky and tracked the eyes of the subject you initiated AF on, it would sometimes wander to another person, particularly if your subject looked away from the camera for long enough (as toddlers often do). Real-time tracking simply transitions from Eye AF to general subject tracking when the subject looks away, so as soon as they look back, the camera is ready to focus on the eye and take the shot with minimal lag or fuss. The camera won’t jump to another person just because your subject looked away; instead, it will stay with your subject for as long as you keep the shutter button half-pressed.

Performance-wise, it’s the stickiest tracking we’ve ever seen, doggedly following your subject even as its appearance changes when it moves or when you change your position and composition. Have a look below at our real-world testing with an erratic toddler and multiple people in the scene. This is HDMI output from an a6400 with the 24mm F1.4 GM lens; the filled-in green circle at the bottom left of the frame shows that focus is achieved and maintained throughout most of the video.

Real-time tracking isn’t only useful for human subjects. Rather, it simply prioritizes whatever subject you place under the autofocus point, be it people or pets, food, a distant mountain, or a nearby flower. It’s that versatile.

In a nutshell, this means that you rarely have to worry about changing autofocus modes on your camera, no matter what sort of photography you’re doing. What’s really exciting is that we’ll surely see this system implemented, and evolved, in future cameras. And while nearly all manufacturers are working toward this sort of simple subject tracking, and incorporating some elements of machine learning, our initial testing suggests Sony’s new system means you don’t have to think about how it works; you can just trust it to stick to your subject better than any system we’ve tested to date.

Addendum: do I need a dedicated Eye AF button anymore?

There’s actually not much need to assign a custom button to Eye AF anymore, since real-time tracking already uses Eye AF on your intended subject. In fact, using real-time tracking is more reliable, since if your subject looks away, it won’t jump to another face in the scene as Eye AF tends to do. If you’ve ever tried to photograph a kids’ birthday party or a wedding, you know how frustrating it can be when Eye AF jumps off to someone other than your intended subject just because he or she looked away for long enough. Real-time tracking ensures the camera stays locked on your subject for as long as your shutter button remains half-depressed, so your subject is already in focus when he or she looks back at the camera or makes that perfect expression. This allows you to nail that decisive, candid moment.

Articles: Digital Photography Review (dpreview.com)